Wish you could have a robot do what you'd like it to, just by thinking about it?

As TechRepublic reports, citing a paper released on Monday, March 6, by MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and Boston University, it's possible. CSAIL developed a robotic system that uses human brain activity, captured as EEG signals, to guide a robot's behavior and correct its mistakes mid-task.

Most robots today are programmed to follow concrete commands that enable them to accomplish specific tasks. CSAIL's system removes the need for that kind of explicit input. Because the human and the robot interact through EEG signals, communication is direct and fast, which could lower the barrier to operating a robot.

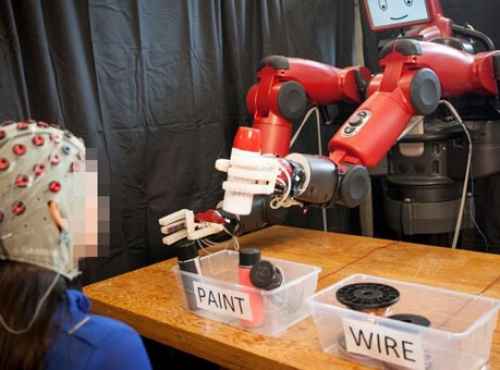

How does it work? The robot harnesses natural signals as they occur in the human brain. In the experiments, a Rethink Robotics Baxter robot (a "people-friendly" robot with arms and an expressive, iPad-like face) performed a simple object-sorting task. Based on the human's mental command, the robot picked up objects of two types, paint cans or wires, that were set on a table and moved them, one by one, into the appropriately labeled bin.

It's a real-time system that processes brain waves within 10 to 30 milliseconds. If the human subject notices the robot making a mistake (for instance, Baxter places a wire into the paint bin when the command was for the other bin), the brain naturally fires an "error-related potential" signal, which is picked up by Baxter's machine learning algorithms. When Baxter detects the signal, it corrects its mistake mid-task. Thus, a feedback loop between human and robot is established. A second feedback loop acts as a backup: if the first error signal goes unrecognized, the system is designed to pick up on a stronger mental signal that follows.
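To make the two feedback loops concrete, here is a minimal Python sketch of the decision logic described above. It is not CSAIL's implementation: the `Trial` fields, the classifier scores, and the 0.5 thresholds are all hypothetical stand-ins for whatever the real EEG classifier produces, and the robot is reduced to a choice between two bins.

```python
from dataclasses import dataclass

BINS = ("paint", "wire")  # the two bins from the sorting task


@dataclass
class Trial:
    chosen_bin: str         # bin the robot initially reaches for
    primary_score: float    # hypothetical classifier score for the first EEG window
    secondary_score: float  # hypothetical score for the later, stronger signal


def other_bin(current: str) -> str:
    """Return the bin the robot did not choose (the task is binary)."""
    return BINS[1] if current == BINS[0] else BINS[0]


def run_trial(trial: Trial,
              primary_threshold: float = 0.5,
              secondary_threshold: float = 0.5) -> tuple[str, str]:
    """Return (final_bin, loop), where loop names the feedback loop that fired.

    Thresholds are assumed values for illustration only.
    """
    # First loop: an error-related potential is detected, so switch bins mid-task.
    if trial.primary_score >= primary_threshold:
        return other_bin(trial.chosen_bin), "primary"
    # Second loop: the first signal was missed, but a stronger one is detected.
    if trial.secondary_score >= secondary_threshold:
        return other_bin(trial.chosen_bin), "secondary"
    # No error signal detected: the robot keeps its original choice.
    return trial.chosen_bin, "none"


if __name__ == "__main__":
    print(run_trial(Trial("paint", 0.9, 0.0)))
    print(run_trial(Trial("paint", 0.2, 0.8)))
    print(run_trial(Trial("wire", 0.1, 0.1)))
```

Because the task has only two options, "correcting" a mistake here simply means switching to the other bin. A real system would replace the fixed scores with the output of a classifier running on live EEG windows.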

Understanding why robots and AI systems do what they do is a fundamental piece of establishing trust, especially with the rise of "co-bots," or collaborative robots. Manuela Veloso, head of the machine learning department at Carnegie Mellon, is currently working to develop robots that can explain their actions verbally.

It's not the first time brain signals have been used to control robots. Previous experiments in operating robots using brain waves approached the challenge by having a human perform a specific mental task, like looking toward a certain color that represents the task the robot should perform. But according to MIT CSAIL research scientist Stephanie Gil, the problem with that approach is it "require[s] the human operators to 'think' in a prescribed way that computers can recognize, like looking at a flashing display that corresponds to a specific task," she said. "This isn't a very natural experience for us, and can also be mentally taxing—which is problematic if our robot is doing a dangerous task in manufacturing or construction."

CSAIL's new work, which shows that natural mental processes can be used to control robots, means that humans do not have to be "trained" in any way. Instead, the machine learns from natural human thoughts.

What does it mean? The results of the experiment show that EEG-based feedback can help humans communicate with robots in an intuitive way. Although the task is simple and binary, with only two options for the robot to choose from, it has implications for future, more complex tasks. For example, the team said it believes the system could eventually be used for multiple-choice tasks. The system could also have big implications for people who can't communicate verbally.

According to Gil, this system could also potentially apply "on a manufacturing floor where the more dangerous tasks can be performed by robots under the supervision of a human operator." It could also be used to operate "an autonomous car that does the bulk of the work while being kept in check by a human driver," she said.

"Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button or even say a word," CSAIL director Daniela Rus said in a press release. "A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars and other technologies we haven't even invented yet."